Meeting Topic

Background and Context. Open science is an umbrella term encompassing numerous approaches to knowledge creation and dissemination.[1] The term represents a broad movement to make research, data, and findings more transparent, accessible, and replicable throughout the research process—from design to dissemination. As highly publicized news stories and journal articles in recent years have cast doubt on research credibility, open science has gained momentum. The discourse has highlighted issues such as data manipulation (e.g., p-hacking), publication bias (e.g., no publication of null results), variation in reporting standards, research results that cannot be reproduced[2], and other individual and system-level practices.[3] Proponents of open science strive to transform research culture and support reproducible science through a range of practices such as pre-registering evaluation plans, providing open access to code and data, and replicating past findings.

The Office of Planning, Research, and Evaluation (OPRE) in the Administration for Children and Families (ACF) seeks to promote rigor, relevance, transparency, independence, and ethics in the conduct of research and evaluation. The ACF Evaluation Policy’s[4] focus on transparency demonstrates a commitment to open science evidenced by:

- Making information about planned and ongoing evaluations easily accessible;

- Publishing study plans in advance;

- Comprehensively presenting results (including favorable, unfavorable, and null findings); and

- Broadly disseminating results, regardless of the findings, in a timely manner.

As a key funder and regulator of scientific research, the Federal Government more broadly has also undertaken several efforts to explore and promote open science practices. For example, in 2013, the Office of Science and Technology Policy (part of the Executive Office of the President) issued a memorandum instructing all agencies with more than $100 million in research and development expenditures to “develop a plan to support increased public access to the results of research funded by the Federal Government.”[5] More recently, in 2017, the bipartisan U.S. Commission on Evidence-Based Policymaking issued a set of recommendations designed to improve data access, strengthen privacy protections, and enhance the Government’s capacity for evidence building.[6] Some of these recommendations were signed into law on January 14, 2019, as part of the Foundations for Evidence-Based Policymaking Act (P.L. 115-435). However, implementation of Federal guidelines has been inconsistent.[7] Agencies seeking to implement open science practices face various challenges, including cultural resistance and concerns about privacy and confidentiality, and require further support to follow new guidelines successfully.

This meeting aims to assemble Federal research and evaluation offices and their partners to identify practices and methods that promote replicable, rigorous research. It will support agencies and programs seeking to implement new Federal guidelines by introducing relevant methods and providing a forum for agencies and partners to share best practices and discuss ways to overcome obstacles.

Meeting Topics and Goals. The 2019 research methods meeting provided attendees with a deeper understanding of methods for promoting and implementing open science in social policy research and evaluation. Speakers described promising methodological practices and shared their expertise applying these practices. The meeting included presentations and sparked discussions on the following questions:

- What is open science, and how can open science methods be used to make research more rigorous, transparent, replicable, and accessible to a broader range of stakeholders?

- In what ways can open science methods be applied throughout the research life cycle? For example, what methods might be applied at the planning, execution, and dissemination phases?

- What role do replication studies and meta-analyses play within the open science framework? How can we conduct rigorous replication studies and meta-analyses to build the social policy evidence base?

- What are the logistical and practical implications of implementing open science methods (e.g., budgeting, overcoming cultural resistance, addressing consent and privacy concerns)?

- How have open science methods been applied in the Federal context, and what can agencies learn from one another about best practices? How can Federal research and evaluation offices encourage the use of current open science methods?

The goals of the meeting follow:

- Introduce the principles of open science and relevant methods for social policy research.

- Encourage attendees to think critically about facilitators and barriers to implementing open science methods in the Federal context.

- Discuss ways to promote the use of open science methods in social policy research and evaluation moving forward (e.g., mechanisms to share successes, ideas for overcoming implementation obstacles).

Meeting Attendees and Logistics. The meeting convened Federal staff and researchers with an interest in exploring open science concepts and methodologies. It was held October 24, 2019, at the Holiday Inn Washington Capitol in Washington, DC. Approximately 150 participants and 15 speakers attended. Participants and speakers included representatives from Federal and State government, research firms, and academia.

References

[1] Fecher, B., & Friesike, S. (2014). Open science: One term, five schools of thought. In S. Bartling and S. Friesike (Eds.), Opening Science, 17–47. Springer Nature. Retrieved from https://link.springer.com/chapter/10.1007/978-3-319-00026-8_2

[2] Winerman, L. (2017). Trends report: Psychologists embrace open science. American Psychological Association, 48, 90. Retrieved from https://www.apa.org/monitor/2017/11/trends-open-science

[3] Winerman, L. (2017). Trends report: Psychologists embrace open science. American Psychological Association, 48, 90. Retrieved from https://www.apa.org/monitor/2017/11/trends-open-science

[4] 79 FR 51574

[5] Holdren, J. P. (2013). Memorandum for the heads of executive departments and agencies: Increasing access to the results of federally funded scientific research, p. 3. Executive Office of the President, Office of Science and Technology Policy. Retrieved from https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/ostp_public_access_memo_2013.pdf

[6] Bipartisan Policy Center. (2017). The promise of evidence-based policymaking. Retrieved from https://bipartisanpolicy.org/commission-evidence-based-policymaking/

[7] National Academies of Sciences, Engineering, and Medicine. (2018). Open science by design: Realizing a vision for 21st century research. Washington, DC: Board on Research Data and Information, Policy, and Global Affairs. Retrieved from http://sites.nationalacademies.org/pga/brdi/open_science_enterprise/

Agenda and Presentations

9:00 – 9:15 a.m.

Naomi Goldstein (Office of Planning, Research, and Evaluation)

9:15 – 9:40 a.m.

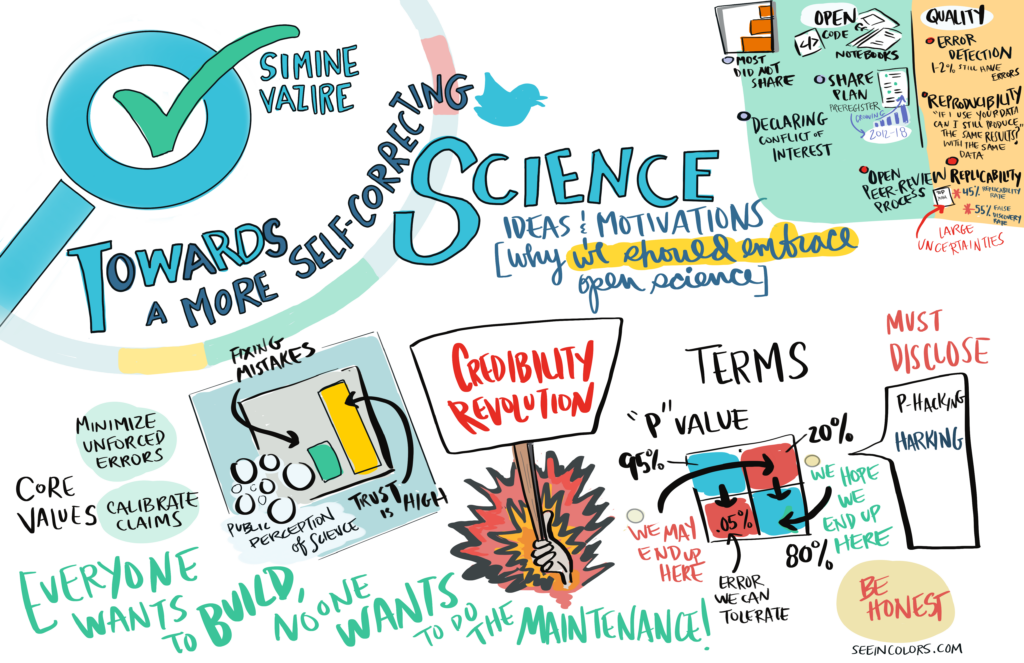

Slide Deck: Towards a More Self-Correcting Science

Simine Vazire (University of California, Davis)

9:40 – 10:00 a.m.

Erica Zielewski (Office of Management and Budget)

10:00 – 10:15 a.m.

10:15 – 11:30 a.m.

Slide Deck: Pre-Registration: What and Why

Katherine Corker (Grand Valley State University)

Slide Deck: Preregistration, Preanalysis, and Reanalysis at OES

Ryan Moore (U.S. General Services Administration)

11:30 a.m. – 12:45 p.m.

12:45 – 2:00 p.m.

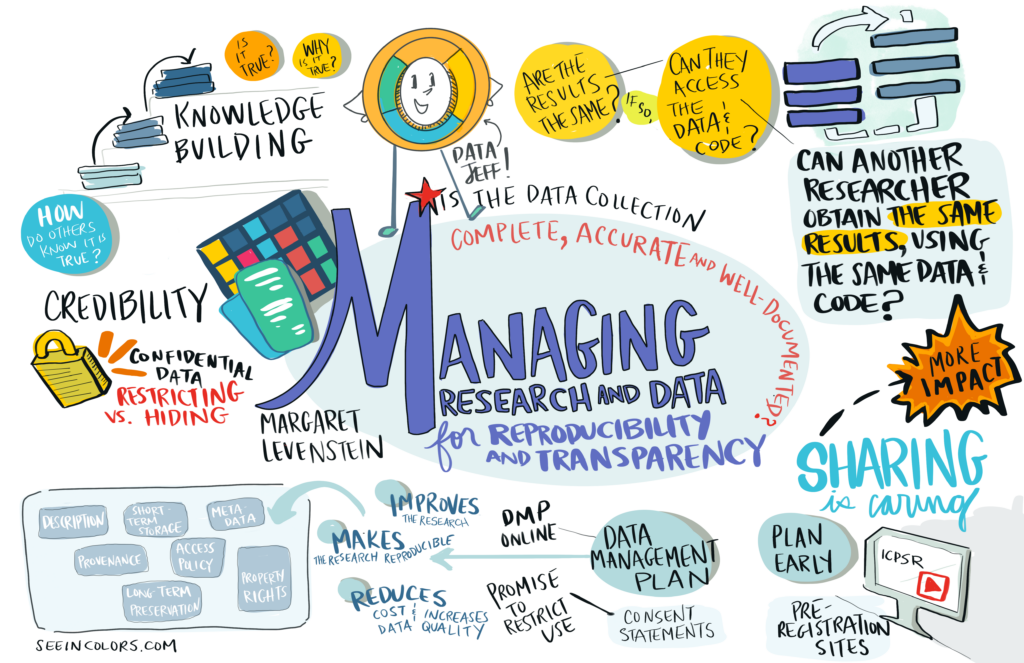

Slide Deck: Managing Research and Data for Reproducibility and Transparency

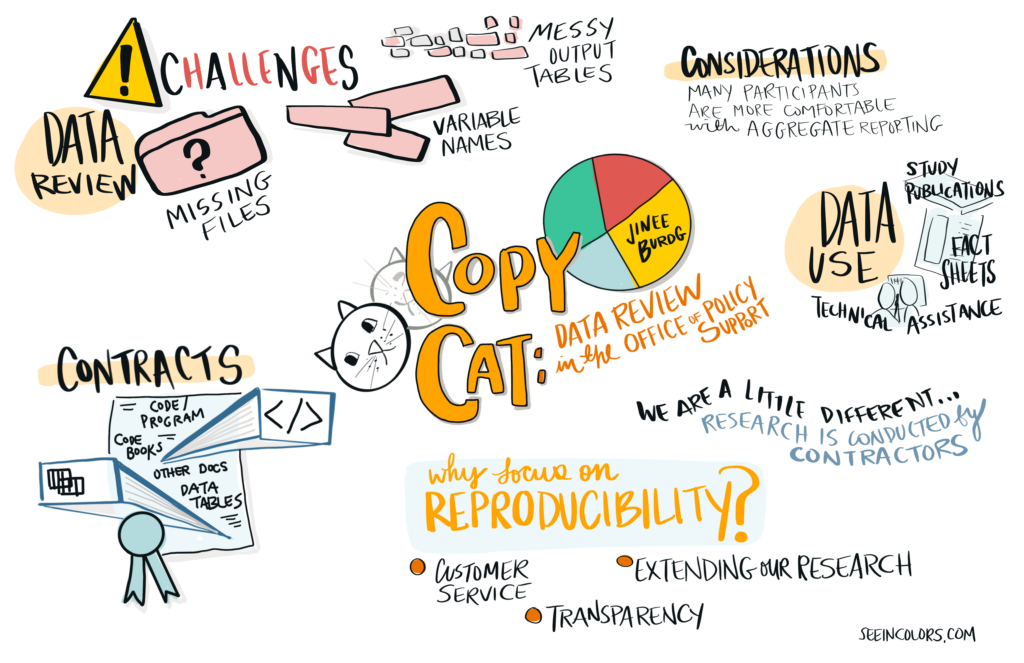

Margaret Levenstein (University of Michigan)

Slide Deck: Copycat: Data Review in the Office of Policy Support

Jinee Burdg (U.S. Department of Agriculture)

Facilitator:

Stuart Buck (Arnold Ventures)

2:00 – 3:15 p.m.

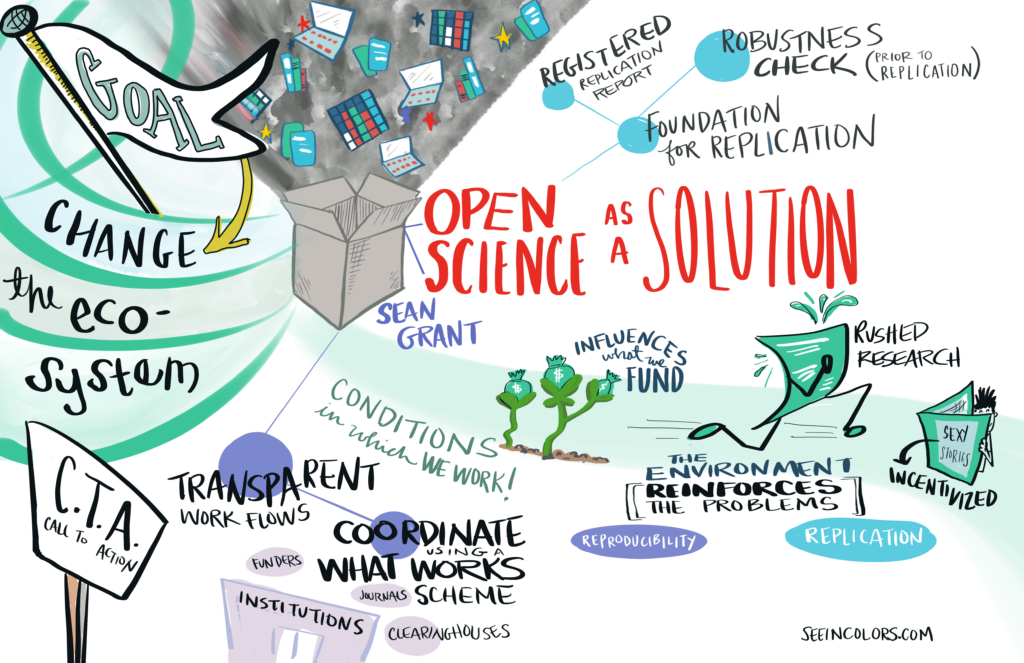

Slide Deck: Building and Synthesizing Evidence: Replication

Sean Grant (Indiana University)

Slide Deck: Promoting Transparency and Replicability in Meta-Analysis

Emily Tanner-Smith (University of Oregon)

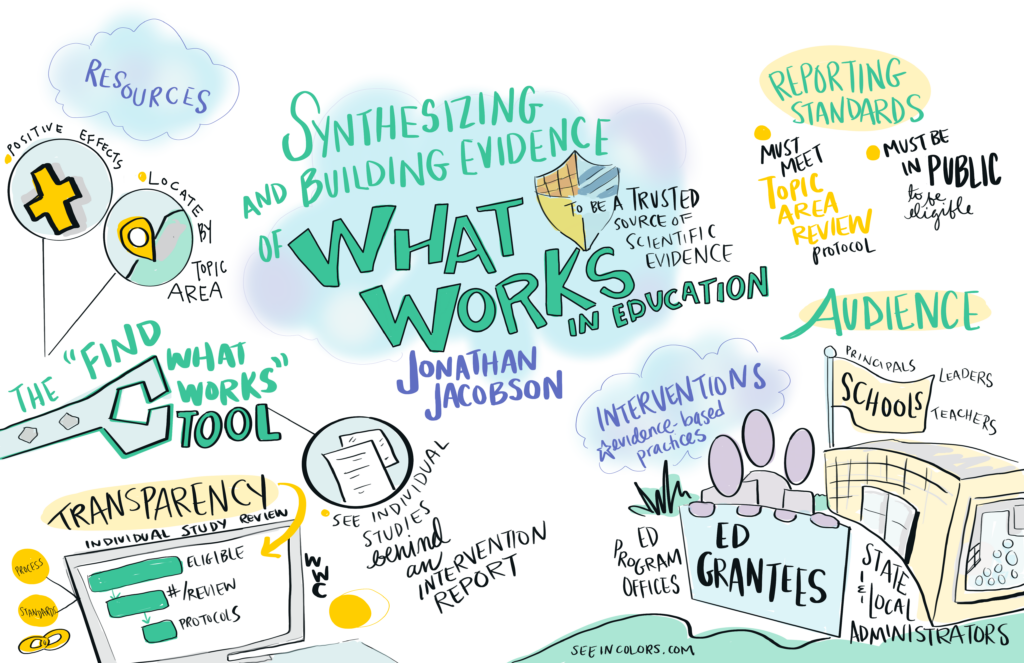

Slide Deck: Synthesizing and Building Evidence of “What Works” in Education

Jonathan Jacobson (U.S. Department of Education)

3:15 – 3:30 p.m.

3:30 – 4:45 p.m.

Panelists:

Michael Huerta (National Institutes of Health)

Jessica Lohmann (U.S. General Services Administration)

Emily Schmitt (Office of Planning, Research, and Evaluation)

David Yokum (Brown University)

Moderator:

Erica Zielewski (Office of Management and Budget)

4:45 – 5:00 p.m.

Evening

All are welcome to continue the discussion at the 21st Amendment Bar & Grill (inside hotel). This is an informal, no-host gathering; drinks and food are available for purchase.

Meeting Products

Meeting Briefs and Resource Documents

Meeting Summary Brief: Methods for Promoting Open Science in Social Policy Research

2019 Methods Meeting Resource List

Meeting Videos

Opening Remarks: Towards a More Self-Correcting Science

Clip: The Credibility Revolution

Closed Caption Version

Audio Description Version

Sketch Notes

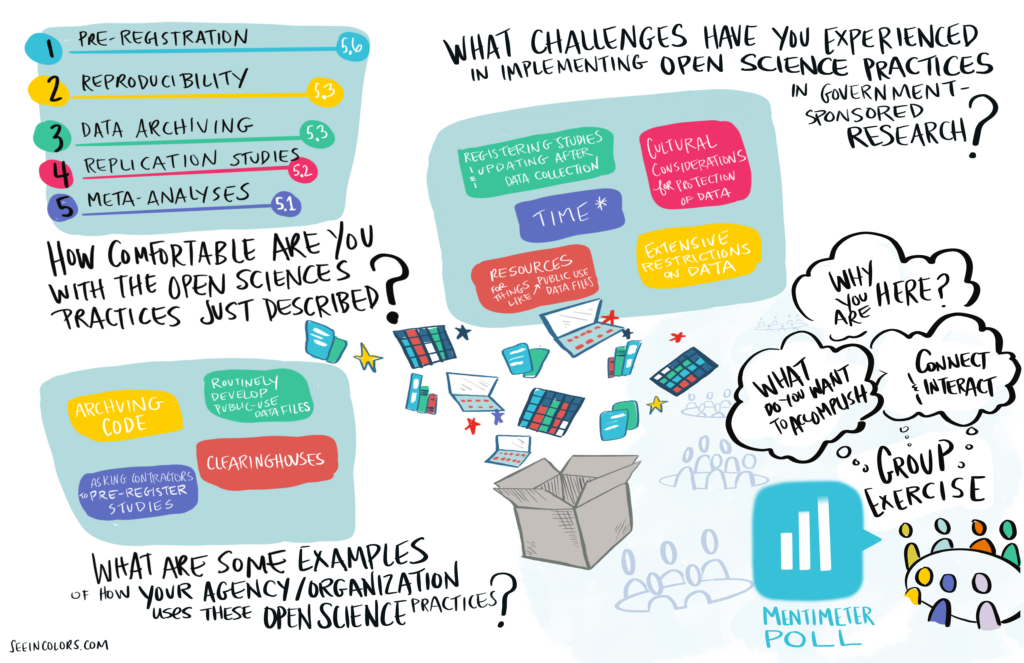

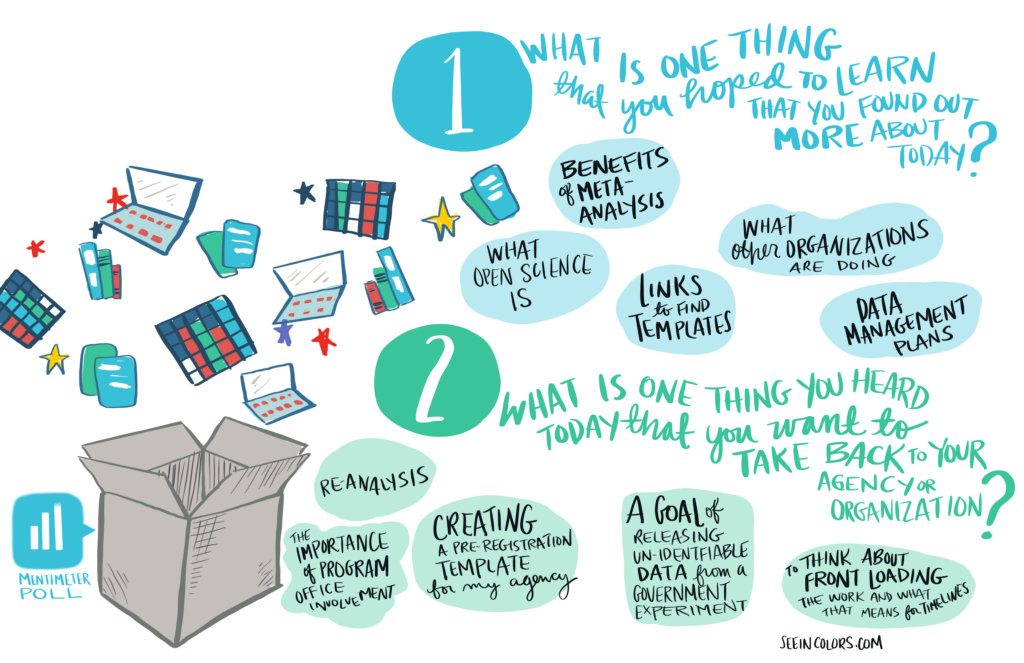

Session 1: Group Exercise

Sketch Notes

Session 2: Planning for Analysis and Pre-registration

Full Presentation: Pre-registration: What and Why

Closed Caption Version

Audio Description Version

Clip: Pre-registration: What and Why

Closed Caption Version

Audio Description Version

Sketch Notes

Session 3: Reproducibility

Clip: What is Reproducibility?

Closed Caption Version

Audio Description Version

Session Sketch Notes

Session 4: Building and Synthesizing Evidence

Clip: Open Science as a Solution

Closed Caption Version

Audio Description Version

Sketch Notes

Session 5: Government Roundtable

Clip: Pre-Analysis Plans

Audio Description Version

Sketch Notes